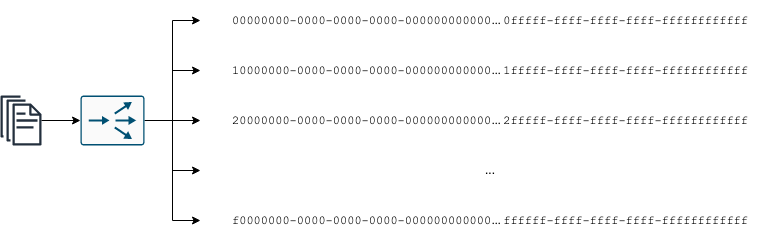

In a project, I have documents identified by UUIDs. For the lack of a better strategy, I decided to partition them based on the value of the UUID identifier. For example, my first partition could be 00000000-0000-0000-0000-000000000000 - 0fffff-ffff-ffff-ffff-ffffffffffff, the second one 10000000-0000-0000-0000-000000000000 and 1fffff-ffff-ffff-ffff-ffffffffffff, and so on.

But… are we sure that the distribution of the generated UUID is uniform? UUID generation guarantees that they are (almost) unique, but does not say anything about how truly random they are.

RFC 4122 - A Universally Unique IDentifier (UUID) URN Namespace, section “4.2. Algorithms for Creating a Time-Based UUID” describes how UUIDs are generated. There are 5 versions, and 4 cannot be considered random since they are based on immutable data such as the host’s MAC address, timestamps that may even collide when requests are very close, or hashes that may collide if their source repeats.

Version 4 is the only one “truly random”. Version 4 utilises 6 bits to identify its version and variant, and has 121 or 122 random bits.

The standard format of the UUID can be used to identify the version: AAAAAAAA-BBBB-CCCC-DDDD-FFFFFFFFFFFF

- the first digit of

CCCCidentifies the version - the first digit of

DDDDidentifies the variant

Java uses version 4 for its random generator

System.out.println(UUID.randomUUID().toString());

output example: 8ff4f274-e699-4de4-9d3e-f973680eaf8d

I wrote a simple program to count the frequency of the first character of random UUIDs.

public class UUIDs {

public static void main(String[] a) {

Map<Character, Long> counters = new HashMap<>();

for (int i = 0; i < 16; i++) {

counters.put(Integer.toHexString(i).charAt(0), 0L);

}

long generated = 0;

while (true) {

String uuid = UUID.randomUUID().toString();

Character c = uuid.charAt(0);

counters.put(c, counters.get(c) + 1);

generated++;

if (generated % 100_000_000 == 0) {

System.out.println("============" + generated + "============");

for (int i = 0; i < 16; i++) {

Character k = Integer.toHexString(i).charAt(0);

System.out.println(k + " = " + counters.get(k));

}

}

}

}

}

I quickly got positive results:

============300000000============

0 = 18744316

1 = 18754636

2 = 18757375

3 = 18749248

4 = 18747804

5 = 18750705

6 = 18748236

7 = 18742448

8 = 18751096

9 = 18754568

a = 18754549

b = 18743361

c = 18752859

d = 18752528

e = 18751776

f = 18744495

I wrote a generalised version to test partitions on the first N numbers. It prints the size of the smallest and largest partitions and their difference in percentage.

public class UUIDs {

private static final int DIGITS = 5;

private static final String KEY = "%0" + DIGITS + "X";

public static void main(String[] a) {

Map<String, Long> counters = new HashMap<>();

for (int i = 0; i < Math.pow(16, DIGITS); i++) {

counters.put(String.format(KEY, i).toLowerCase(), 0L);

}

long generated = 0;

while (true) {

String uuid = UUID.randomUUID().toString();

String c = uuid.substring(0, DIGITS);

counters.put(c, counters.get(c) + 1);

generated++;

if (generated % 100_000_000 == 0) {

System.out.println("============" + generated + "============");

long min = Long.MAX_VALUE;

long max = Long.MIN_VALUE;

for (int i = 0; i < Math.pow(16, DIGITS); i++) {

String k = String.format(KEY, i).toLowerCase();

Long v = counters.get(k);

if (v > max) {

max = v;

}

if (v < min) {

min = v;

}

}

System.out.println("min: " + min);

System.out.println("max: " + max);

System.out.println("diff: " + ((max - min) / (double) max * 100 + "%"));

}

}

}

}

For 2 digits:

============100000000============

min: 388911

max: 392186

diff: 0.8350629548224566%

============200000000============

min: 779131

max: 783243

diff: 0.5249967123868327%

============300000000============

min: 1168957

max: 1174030

diff: 0.43210139434256367%

============400000000============

min: 1559239

max: 1565433

diff: 0.395673273784314%

It is interesting to observe that the difference between min and max decreases with the iterations.

For 5 digits:

============100000000============

min: 54

max: 145

diff: 62.758620689655174%

============200000000============

min: 129

max: 256

diff: 49.609375%

============300000000============

min: 207

max: 366

diff: 43.44262295081967%

============400000000============

min: 290

max: 477

diff: 39.20335429769392%

============500000000============

min: 372

max: 592

diff: 37.16216216216216%

============600000000============

min: 466

max: 694

diff: 32.85302593659942%

Conclusions

If the UUID is version 4 and the implementation is truly pseudo-random, partitioning on the first N digits is a good strategy.

The formula to determine the size of the average partition is very simple:

volume of data / pow(16, number of digits)

So, for example

- 100.000.000 with 2 digits: 100.000.000 / 256 = 390.625

- 600.000.000 with 5 digits: 600.000.000 / 65536 = 572